On the web, the most widely used Unicode encoding is UTF-8, because it's reasonably efficient for many uses. UTF-8 uses 1 byte for the ASCII characters and 2 bytes for most European and Middle Eastern scripts, but it's less efficient for Asian scripts, which require 3 bytes or more per character.

For example, the French saying "Il vaut mieux prévenir que guérir" is equivalent to the following sequence of bytes when it's encoded using UTF-8 (the bytes are shown in hexadecimal): 49 6c 20 76 61 75 74 20 6d 69 65 75 78 20 70 72 c3 a9 76 65 6e 69 72 20 71 75 65 20 67 75 c3 a9 72 69 72. The 33 characters take 35 bytes, because each é is encoded as the two bytes c3 a9.

Having said all that, the content of a Buffer may be quickly converted to a String using the toString() method.
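As a minimal sketch of that conversion, the snippet below encodes the French saying with Buffer.from() and decodes it again with toString(). The variable names are only illustrative, and the last step deliberately decodes with the wrong encoding to show what goes wrong.

```javascript
// '\u00e9' is the precomposed letter é, written as an escape so the example
// does not depend on how this file itself is encoded.
const saying = 'Il vaut mieux pr\u00e9venir que gu\u00e9rir';

// Encode the string into bytes using UTF-8.
const sayingBytes = Buffer.from(saying, 'utf8');
console.log(saying.length);                // 33 characters
console.log(sayingBytes.length);           // 35 bytes: each é takes two bytes
console.log(sayingBytes.toString('hex'));  // the bytes in hexadecimal

// toString() decodes the bytes back into a string; 'utf8' is the default.
const decoded = sayingBytes.toString();
console.log(decoded === saying);           // true

// Decoding with the wrong encoding does not throw: it silently garbles the text.
const garbled = sayingBytes.toString('latin1');
console.log(garbled);                      // each é shows up as two characters
```

toString() also accepts other encoding names, such as 'hex' and 'base64', which is handy when the bytes need to be printed or sent as text.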

Node.js buffer to string code

Each character of a text is assigned a code point: a number that identifies it in a one-to-one relationship. Each code point may then be represented by a sequence of bits according to an encoding. An encoding is a table that maps each code point to its corresponding bit string. It may look like a simple mapping, but many encodings were created over the last decades to accommodate different computer architectures and character sets.

ASCII includes the basic Latin characters (A-Z, upper and lower case), digits, and many punctuation symbols, besides some control characters. ASCII is a 7-bit encoding, but computers store data in 8-bit blocks called bytes, so many 8-bit encodings were created, extending the first 128 ASCII characters with another 128 language-specific characters.

A very common encoding is ISO-8859-1, also known by several other names like "latin1", "IBM819", "CP819", and even "WE8ISO8859P1". ISO-8859-1 adds some extra symbols to ASCII and many accented letters like Á, Ú, È, and Õ.

Those language-specific encodings worked reasonably well for some situations but failed miserably in multi-language contexts. Information loss and data corruption were common because programs tried to manipulate text using the incorrect encoding for a file. Even showing the file content was a mess, because text files don't include the name of the encoding that was used to encode their content.

To fix that, work on the Unicode standard started in the 1980s. Its objective is to map every currently used character in every human language (and some historic scripts) to a single code point. The Unicode standard also defines a number of generic encodings that are able to encode every Unicode code point. The most common encodings for Unicode are UTF-16, UCS-2, UTF-32, and UTF-8. UCS-2 and UTF-32 use a fixed width for every code point (UCS-2 uses 16 bits, which prevents it from representing all Unicode characters, while UTF-32 uses 32 bits). UTF-8 and UTF-16 use a variable number of bytes to encode each code point.
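To make the difference between these encodings more concrete, here is a small sketch that measures how many bytes a few sample characters take in UTF-8 and UTF-16, and what happens when a character doesn't exist in latin1. The sample characters and the roundTrip helper are chosen only for illustration.

```javascript
// Sample characters, written as escapes: A, é, €, 日 and an emoji (U+1F600).
const sampleChars = ['A', '\u00e9', '\u20ac', '\u65e5', '\u{1F600}'];

// For each character, collect its code point and its size in two encodings.
const sizes = sampleChars.map((ch) => ({
  codePoint: 'U+' + ch.codePointAt(0).toString(16).toUpperCase().padStart(4, '0'),
  utf8: Buffer.byteLength(ch, 'utf8'),
  utf16le: Buffer.byteLength(ch, 'utf16le'),
}));
console.table(sizes);

// latin1 has only 256 code points, so é survives a round trip but € does not.
const roundTrip = (ch) => Buffer.from(ch, 'latin1').toString('latin1');
console.log(roundTrip('\u00e9') === '\u00e9'); // true
console.log(roundTrip('\u20ac') === '\u20ac'); // false: information was lost
```

The table shows UTF-8 growing from 1 to 4 bytes per character, while UTF-16 uses 2 bytes for most characters and 4 bytes for the emoji.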
The subject of encoding text characters using computers is vast and complex, and I'm not going to delve deeply into it in this short article.

Conceptually, text is composed of characters: the smallest pieces of meaningful content. They are usually letters, but depending on the language there may be other, very different symbols.

The Buffer class adds some utility methods like concat(), allocUnsafe(), and compare(), and methods to read and write various numeric types, mimicking what's offered by the DataView class (e.g. readUint16BE, writeDoubleLE, and swap32).
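The following sketch exercises a few of those Buffer methods on made-up byte values. It uses readUInt16BE, the older spelling of readUint16BE, because both names refer to the same method and the older one also works on older Node.js versions.

```javascript
// concat() joins several buffers into a new one.
const head = Buffer.from([0x12, 0x34]);
const tail = Buffer.from([0x56, 0x78]);
const joined = Buffer.concat([head, tail]);
console.log(joined.toString('hex'));        // 12345678

// compare() orders buffers byte by byte, like a sort comparator.
console.log(Buffer.compare(head, tail));    // -1, because 0x12 < 0x56

// Read a 16-bit unsigned integer stored in big-endian order.
console.log(joined.readUInt16BE(0));        // 4660, which is 0x1234

// swap32() reverses the byte order of every 32-bit group, in place.
joined.swap32();
console.log(joined.toString('hex'));        // 78563412

// allocUnsafe() skips zero-filling, so overwrite every byte before reading.
const scratch = Buffer.allocUnsafe(8);
scratch.writeDoubleLE(1.5, 0);              // a double takes all 8 bytes
console.log(scratch.readDoubleLE(0));       // 1.5
```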

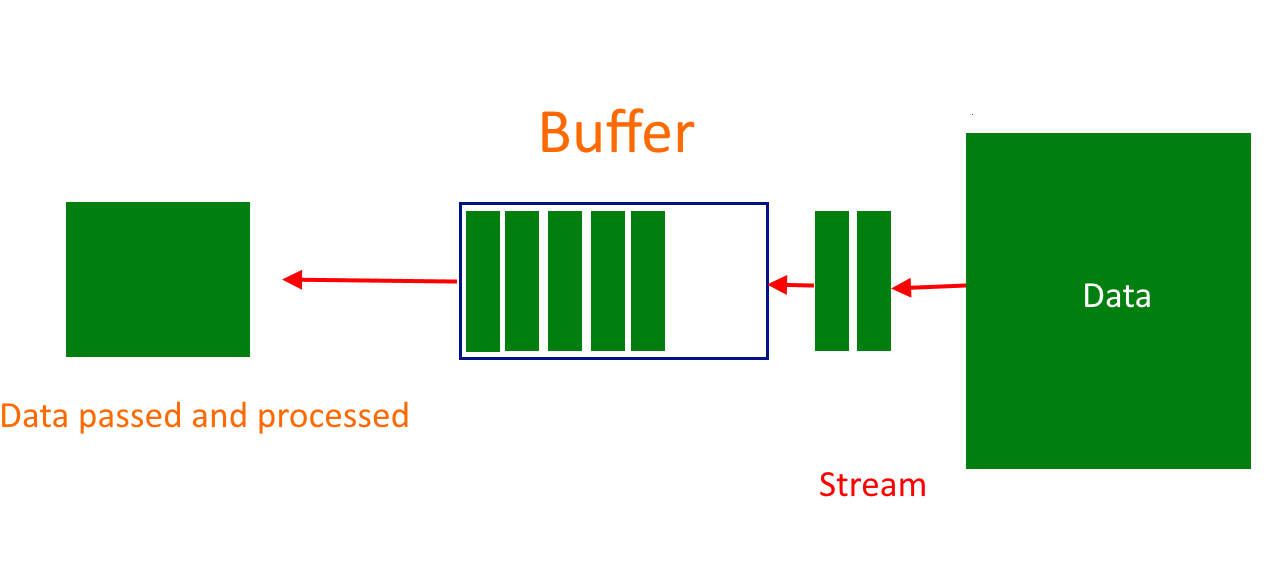

In the Node.js world, there's another class, Buffer, that descends from Uint8Array.

Uint8Array allows handling binary data such as image files, networking protocols, and video streaming in a way that is similar to how it's done in other programming languages.
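Because Buffer descends from Uint8Array, a Buffer can be passed to anything that expects a Uint8Array, and both can look at the same memory. Here is a minimal sketch of that relationship, with illustrative variable names:

```javascript
const greeting = Buffer.from([72, 105]);            // the bytes of "Hi"
console.log(greeting instanceof Uint8Array);        // true
console.log(Buffer.isBuffer(new Uint8Array(2)));    // false

// APIs that accept a Uint8Array, such as TextDecoder, accept a Buffer too.
console.log(new TextDecoder().decode(greeting));    // Hi

// A Uint8Array view over the same memory. The offset and length matter
// because a small Buffer may be a slice of a larger shared ArrayBuffer.
const view = new Uint8Array(greeting.buffer, greeting.byteOffset, greeting.byteLength);
view[1] = 111;                                      // 111 is the letter "o"
console.log(greeting.toString());                   // Ho
```

The relationship only goes one way: every Buffer is a Uint8Array, but a plain Uint8Array doesn't have the Buffer methods.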

For a long time, JavaScript lacked support for handling arrays of binary data. That changed with ECMAScript 2015, when typed arrays were introduced and allowed better handling of those cases.

All typed array types descend from an abstract TypedArray class, each one specializing in a specific integer word size and signedness, and some providing floating-point support. Float64Array, for example, represents an array of 64-bit floating-point numbers; Int16Array represents an array of 16-bit signed integers.

Of all typed arrays, the most useful is probably Uint8Array.
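To illustrate how typed arrays behave, here is a short sketch with made-up values. It shows the fixed element size of each type, what happens to values that don't fit, and how a Uint8Array can expose the raw bytes behind another typed array.

```javascript
// Each typed array stores elements of one fixed numeric type.
const pcmSamples = new Int16Array([1, -2, 32767]);
console.log(pcmSamples.BYTES_PER_ELEMENT);  // 2
console.log(pcmSamples.byteLength);         // 6

// Values that do not fit wrap around instead of throwing.
console.log(new Int16Array([32768])[0]);    // -32768
console.log(new Uint8Array([256, -1]));     // elements 0 and 255

// Float64Array stores 64-bit floating-point numbers.
const ratios = Float64Array.of(0.5, 1.25);
console.log(ratios.byteLength);             // 16

// A Uint8Array over the same ArrayBuffer exposes the raw bytes.
const rawBytes = new Uint8Array(pcmSamples.buffer);
console.log(rawBytes.length);               // 6
```

The order of the raw bytes depends on the endianness of the machine, which is why the sketch only prints how many there are.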
